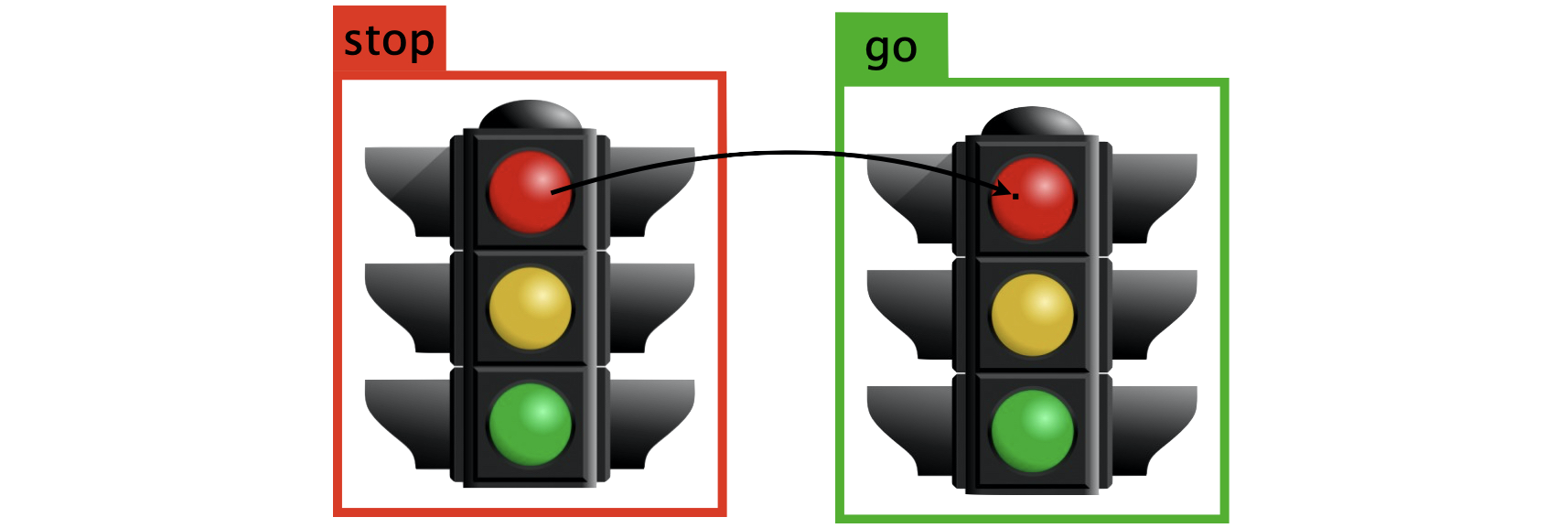

Did you notice the stray pixel on the right side of the image above? Your convnet would have, and it might have misclassified a red stop light as green, with potentially severe consequences.

A machine learning model can be misled by adding a small, imperceptible perturbation to an image. Inputs perturbed in this way are known as adversarial examples. Studying this phenomenon is important from a safety point of view. For neural networks in particular, it can also offer insights into what features models learn and what the limitations of the learned representations are. Put differently, the existence of adversarial examples shows that vision systems perceive images very differently from the way humans do.
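To make the idea of a small perturbation concrete, here is a minimal sketch on a toy linear classifier rather than a real convnet. It nudges every pixel by at most `epsilon` in the direction of the sign of the score's gradient, which is enough to flip the predicted class. The classifier, the random "image", and all names (`linear_score`, `sign_perturbation`, `epsilon`) are assumptions made for illustration. This is not necessarily one of the attacks implemented in this project.

```python
import numpy as np


def linear_score(w, b, x):
    """Score of a toy linear classifier; a positive score means class 1."""
    return float(np.dot(w, x) + b)


def sign_perturbation(w, x, epsilon, push_positive=True):
    """Shift each pixel by at most epsilon along the sign of the gradient.

    For a linear score w.x + b, the gradient with respect to x is w,
    so the sign of the gradient is simply sign(w).
    """
    direction = np.sign(w) if push_positive else -np.sign(w)
    # Keep pixel values inside the valid [0, 1] range.
    return np.clip(x + epsilon * direction, 0.0, 1.0)


# Hypothetical setup: random weights and a random 28x28 "image".
rng = np.random.default_rng(0)
n_pixels = 28 * 28
w = rng.normal(size=n_pixels)
x = rng.uniform(0.2, 0.8, size=n_pixels)

# Choose the bias so that the clean image is classified as class 0.
b = -float(np.dot(w, x)) - 1.0

epsilon = 0.01  # at most 1% of the pixel range per pixel
x_adv = sign_perturbation(w, x, epsilon, push_positive=True)

clean_score = linear_score(w, b, x)
adv_score = linear_score(w, b, x_adv)
max_change = float(np.max(np.abs(x_adv - x)))

print("clean score:", clean_score)
print("adversarial score:", adv_score)
print("largest per-pixel change:", max_change)
```

Each pixel moves by a tiny amount, but the changes add up across all 784 inputs, so the score crosses the decision boundary. Deep networks are not linear, so this toy case should be read as intuition only.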

In this project we review selected references to present the current state of research on this topic. To learn more about how adversarial attacks work, we implement selected attack methods in Jupyter notebooks and explain the steps. We then compare the effectiveness of these attacks in the results section.