Setting Core ML model thresholds at runtime

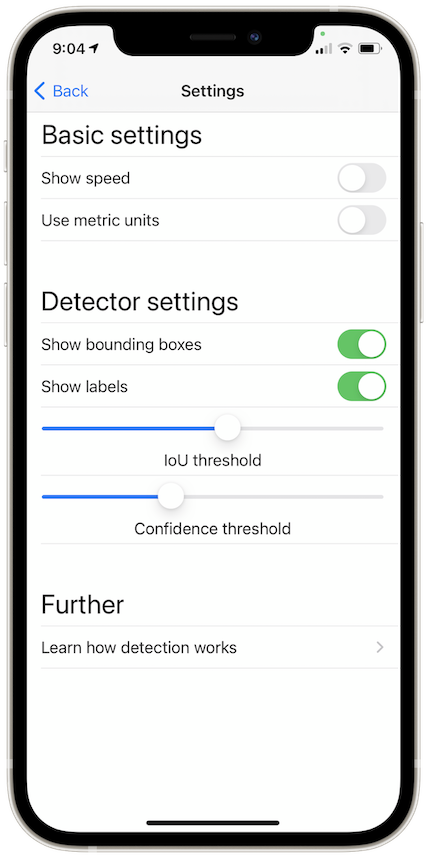

One- and two-stage detectors such as YOLO and Faster R-CNN are parametrized by two thresholds: Intersection over Union (IoU) and confidence. In our app, these thresholds can be changed at runtime in the settings menu (see figure 1). In this post, I explain how we implemented this functionality.

Reminder: IoU and confidence

Before we get into the implementation, here is a quick review of what these two thresholds do. Intersection over Union (IoU) measures how much two boxes overlap: the area of their intersection divided by the area of their union. It is used in the non-maximum suppression (NMS) stage of one- and two-stage object detectors. By design, these models often generate many redundant boxes for the same object. Non-maximum suppression removes these redundant bounding boxes and keeps the best box per object: a box is considered redundant if its IoU with a higher-scoring box exceeds the IoU threshold. See the post-processing section for more details.
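To make the IoU threshold concrete, here is a minimal, framework-independent sketch of IoU and greedy non-maximum suppression in Swift. It is not the NMS implementation inside the exported Core ML model; the `Box` type and the function names are hypothetical, and boxes are assumed to be axis-aligned and given by their corner coordinates.

```swift
/// Axis-aligned bounding box given by its corner coordinates (hypothetical type for this sketch).
struct Box {
    var minX: Double
    var minY: Double
    var maxX: Double
    var maxY: Double

    var area: Double { max(0, maxX - minX) * max(0, maxY - minY) }
}

/// IoU: area of the intersection divided by area of the union of the two boxes.
func intersectionOverUnion(_ a: Box, _ b: Box) -> Double {
    let overlapWidth = max(0, min(a.maxX, b.maxX) - max(a.minX, b.minX))
    let overlapHeight = max(0, min(a.maxY, b.maxY) - max(a.minY, b.minY))
    let intersection = overlapWidth * overlapHeight
    let union = a.area + b.area - intersection
    return union > 0 ? intersection / union : 0
}

/// Greedy non-maximum suppression: repeatedly keep the highest-scoring remaining box
/// and discard every other box whose IoU with it exceeds `iouThreshold`.
/// Returns the indices of the kept boxes, highest score first.
func nonMaxSuppression(boxes: [Box], scores: [Double], iouThreshold: Double) -> [Int] {
    precondition(boxes.count == scores.count, "one score per box")
    var remaining = boxes.indices.sorted { scores[$0] > scores[$1] }
    var kept: [Int] = []
    while !remaining.isEmpty {
        let best = remaining.removeFirst()
        kept.append(best)
        remaining.removeAll { intersectionOverUnion(boxes[best], boxes[$0]) > iouThreshold }
    }
    return kept
}
```

For example, two unit boxes shifted horizontally by 0.1 have an IoU of 0.9 / 1.1 ≈ 0.82: with an IoU threshold of 0.6 the lower-scoring box is suppressed, while with 0.9 both are kept. A higher IoU threshold therefore lets more overlapping boxes survive.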

The confidence threshold lets you tune the trade-off between false positives and false negatives. In general, a lower confidence threshold means fewer objects are missed, at the cost of more false positives. The model's class confidence scores (for example, the values of a softmax output layer) are compared with the threshold, and the model only outputs a prediction for a class if its score exceeds the threshold.
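The effect of the confidence threshold can be sketched in the same way. The `Detection` type and the `applyConfidenceThreshold` function below are hypothetical and only illustrate the comparison described above: a prediction survives only if its score is strictly greater than the threshold.

```swift
/// One class prediction with its confidence score (hypothetical type for this sketch).
struct Detection {
    let label: String
    let confidence: Double
}

/// Keeps only the detections whose confidence exceeds the threshold.
func applyConfidenceThreshold(_ detections: [Detection], threshold: Double) -> [Detection] {
    return detections.filter { $0.confidence > threshold }
}

let detections = [
    Detection(label: "car", confidence: 0.95),
    Detection(label: "traffic light", confidence: 0.55),
    Detection(label: "stop sign", confidence: 0.30),
]

// A strict threshold keeps only the most certain prediction: "car"
let strict = applyConfidenceThreshold(detections, threshold: 0.9)
// A lower threshold also admits less certain predictions: "car" and "traffic light"
let lenient = applyConfidenceThreshold(detections, threshold: 0.4)
```

Whether the extra "traffic light" detection at the lower threshold is a true or a false positive depends on the image; the threshold only decides how much uncertainty you are willing to accept.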

Thresholds in the model

When exporting the model with coremltools, you define which inputs are available. By default, this is only the image input, but you can add optional inputs such as the two thresholds.

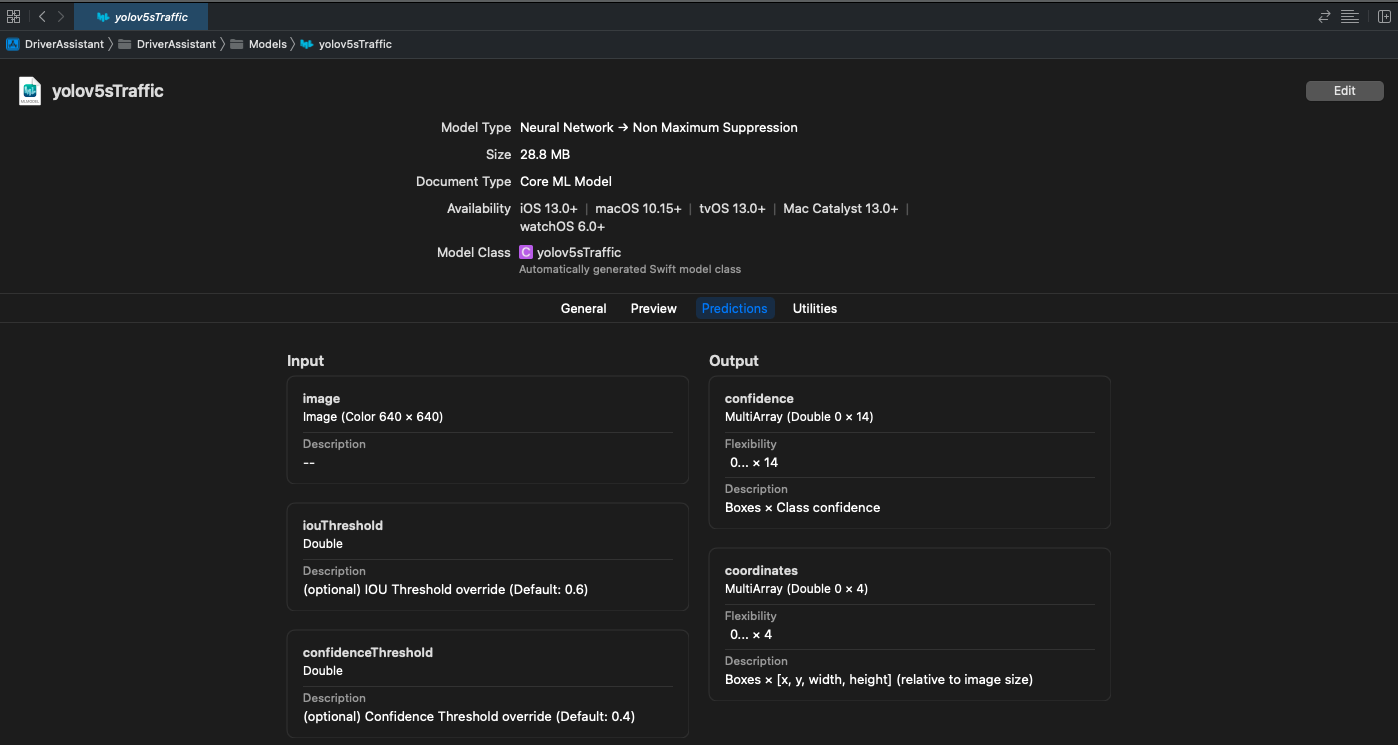

Once you have an exported model in your Swift project, the first step is to confirm that the two thresholds are available as inputs to the model. To do so, click on the model file in your Xcode project, for example yolov5sTraffic.mlmodel, and then go to the Predictions tab, see figure 2.

Alternatively, you can inspect the input parameters defined in the model class of your detection model. Xcode generates this class automatically when you add a .mlmodel file to your project. To inspect it, click on the class name in the model view. For example, our model yolov5sTraffic has the following three input parameters:


```swift
class yolov5sTrafficInput : MLFeatureProvider {

    /// image as color (kCVPixelFormatType_32BGRA) image buffer, 640 pixels wide by 640 pixels high
    var image: CVPixelBuffer

    /// (optional) IOU Threshold override (Default: 0.6) as double value
    var iouThreshold: Double

    /// (optional) Confidence Threshold override (Default: 0.4) as double value
    var confidenceThreshold: Double

    var featureNames: Set<String> {
        get {
            return ["image", "iouThreshold", "confidenceThreshold"]
        }
    }

    // [...] initializer and featureValue(for:) omitted from this excerpt
}
```
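Besides the Predictions tab and the generated class, the inputs can also be checked in code. The sketch below uses `MLModel.modelDescription.inputDescriptionsByName` and `MLFeatureDescription.isOptional` to list each input and whether it is optional. `thresholdInputNames`, `missingThresholdInputs(in:)` and `printInputs(of:)` are hypothetical helpers, and the commented-out usage assumes the compiled `yolov5sTraffic.mlmodelc` is in the app bundle.

```swift
import CoreML

/// Names of the optional threshold inputs, as they appear in the generated yolov5sTrafficInput class.
let thresholdInputNames: Set<String> = ["iouThreshold", "confidenceThreshold"]

/// Returns the threshold inputs that are missing from a model's input names.
func missingThresholdInputs(in inputNames: Set<String>) -> Set<String> {
    return thresholdInputNames.subtracting(inputNames)
}

/// Prints every input of a loaded model and whether Core ML marks it as optional,
/// then reports any threshold input the model does not expose.
func printInputs(of model: MLModel) {
    let inputs = model.modelDescription.inputDescriptionsByName
    for (name, description) in inputs.sorted(by: { $0.key < $1.key }) {
        print("\(name): optional = \(description.isOptional)")
    }
    let missing = missingThresholdInputs(in: Set(inputs.keys))
    if !missing.isEmpty {
        print("Missing threshold inputs: \(missing.sorted())")
    }
}

// Usage (assumes the compiled model is bundled with the app):
// if let url = Bundle.main.url(forResource: "yolov5sTraffic", withExtension: "mlmodelc"),
//    let model = try? MLModel(contentsOf: url) {
//     printInputs(of: model)
// }
```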

Setting the thresholds

In our app, we run the model through a VNCoreMLRequest. If we don't provide values for the optional thresholds, the model uses their default values.

To pass in values for the thresholds, we use a ThresholdProvider object, which conforms to the MLFeatureProvider protocol and is explained below. This object lets us set the optional inputs of the model.


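```swift
import CoreML
import Vision

// Hypothetical name for the class that owns the model (for example, a view controller).
class ObjectDetectionController {
    private var requests = [VNRequest]()
    private var thresholdProvider = ThresholdProvider()

    // Define threshold values. These could also be defined elsewhere in the code so that they can
    // be updated by the user.
    let iouThreshold: Double = 0.7
    let confidenceThreshold: Double = 0.9

    func setUpVision() throws {
        // Load the compiled model from the app bundle
        guard let modelURL = Bundle.main.url(forResource: "yolov5sTraffic", withExtension: "mlmodelc") else {
            return
        }
        let visionModel = try VNCoreMLModel(for: MLModel(contentsOf: modelURL))

        // Run the model; [weak self] avoids a retain cycle between self and the stored request
        let objectRecognition = VNCoreMLRequest(model: visionModel, completionHandler: { [weak self] (request, error) in
            DispatchQueue.main.async(execute: {
                guard let self = self else { return }
                if let results = request.results {
                    // Update thresholds; the new values are used the next time the model runs
                    self.thresholdProvider.values = ["iouThreshold": MLFeatureValue(double: self.iouThreshold),
                                                     "confidenceThreshold": MLFeatureValue(double: self.confidenceThreshold)]
                    visionModel.featureProvider = self.thresholdProvider
                    // [...] process results
                }
            })
        })
        requests = [objectRecognition]
    }
}
```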

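The values for iouThreshold and confidenceThreshold are set on the thresholdProvider object inside the request's completion handler, and the provider is then assigned to visionModel.featureProvider. Because this happens after a prediction has finished, the updated values are used the next time the model is called. In this example, the thresholds are constants, but in our app we read the values set with the sliders from UserDefaults: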

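```swift
// Computed properties, so every access reads the latest value stored by the sliders
var iouThreshold: Double { UserDefaults.standard.double(forKey: "iouThreshold") }
var confidenceThreshold: Double { UserDefaults.standard.double(forKey: "confidenceThreshold") }
```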
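One pitfall when reading the thresholds this way: `UserDefaults.double(forKey:)` returns 0.0 for a key that has never been written, so both thresholds would be 0 until the user moves a slider. A minimal sketch of a fix, assuming the same key names as above, registers fallback values at launch and clamps what it reads to [0, 1]. The helper names and the commented-out slider handler are hypothetical.

```swift
import Foundation

/// Registers fallback values for the threshold keys. Registered defaults are used
/// only while no value has been stored for a key; they are not written to disk.
func registerThresholdDefaults(in defaults: UserDefaults = .standard) {
    // Fallbacks mirror the defaults of the exported model (IoU 0.6, confidence 0.4).
    defaults.register(defaults: [
        "iouThreshold": 0.6,
        "confidenceThreshold": 0.4,
    ])
}

/// Reads a threshold and clamps it to the valid range [0, 1].
func threshold(forKey key: String, in defaults: UserDefaults = .standard) -> Double {
    return min(max(defaults.double(forKey: key), 0.0), 1.0)
}

// Hypothetical slider handler: store the new value so the next model call picks it up.
// @objc func iouSliderChanged(_ slider: UISlider) {
//     UserDefaults.standard.set(Double(slider.value), forKey: "iouThreshold")
// }
```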

Let us look at the ThresholdProvider class now to see how these optional inputs are set.


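```swift
import CoreML

class ThresholdProvider: MLFeatureProvider {
    // Keys are the input names from the generated yolov5sTrafficInput class; values are the initial thresholds
    var values: [String: MLFeatureValue] = [
        "iouThreshold": MLFeatureValue(double: 0.6),
        "confidenceThreshold": MLFeatureValue(double: 0.9)
    ]

    // The inputs this provider supplies to the model
    var featureNames: Set<String> {
        return Set(values.keys)
    }

    // The value for a requested input, or nil if this provider does not supply it
    func featureValue(for featureName: String) -> MLFeatureValue? {
        return values[featureName]
    }
}
```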

The dictionary values maps the names of the thresholds, exactly as they appear in the yolov5sTraffic model class, to their initial values. The computed property featureNames reports which inputs this provider supplies, and the method featureValue(for:) returns the value for a requested input name.
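If you prefer typed properties over a dictionary, the same protocol can be implemented with one stored property per threshold. `TypedThresholdProvider` is a hypothetical variant, not the class used in our app. It answers `featureNames` and `featureValue(for:)` from its current property values and returns `nil` for any other input, such as the image, which Vision supplies itself.

```swift
import CoreML

/// Hypothetical variant of ThresholdProvider with one typed property per threshold.
final class TypedThresholdProvider: MLFeatureProvider {
    var iouThreshold: Double
    var confidenceThreshold: Double

    // Defaults mirror the model's documented defaults (IoU 0.6, confidence 0.4)
    init(iouThreshold: Double = 0.6, confidenceThreshold: Double = 0.4) {
        self.iouThreshold = iouThreshold
        self.confidenceThreshold = confidenceThreshold
    }

    // Names must match the optional inputs of the exported model
    var featureNames: Set<String> {
        return ["iouThreshold", "confidenceThreshold"]
    }

    // Wraps the current property value in an MLFeatureValue each time it is requested
    func featureValue(for featureName: String) -> MLFeatureValue? {
        switch featureName {
        case "iouThreshold":
            return MLFeatureValue(double: iouThreshold)
        case "confidenceThreshold":
            return MLFeatureValue(double: confidenceThreshold)
        default:
            // Other inputs (such as the image) are not supplied by this provider
            return nil
        }
    }
}
```

With typed properties, a slider handler can assign a `Double` directly instead of building `MLFeatureValue` objects, and a misspelled key can only occur in one place.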

When we call the model with the VNCoreMLRequest, we set the thresholds by updating this dictionary and then assigning the provider to the featureProvider property of the model object visionModel.
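Because the provider is assigned in the completion handler, new slider values are only picked up by a later call to the model. If you want them to apply to the current frame, one option, sketched below under assumptions, is to assign the provider right before performing the request. This variation uses Core ML's built-in `MLDictionaryFeatureProvider` instead of a custom class; `makeThresholdFeatures` and `detectObjects` are hypothetical names, and the pixel buffer is assumed to come from your camera pipeline.

```swift
import CoreML
import Vision

/// Builds a feature provider holding the current thresholds.
/// MLDictionaryFeatureProvider is Core ML's built-in dictionary-backed provider.
func makeThresholdFeatures(iouThreshold: Double, confidenceThreshold: Double) throws -> MLDictionaryFeatureProvider {
    return try MLDictionaryFeatureProvider(dictionary: [
        "iouThreshold": MLFeatureValue(double: iouThreshold),
        "confidenceThreshold": MLFeatureValue(double: confidenceThreshold),
    ])
}

/// Hypothetical per-frame entry point: applies the latest thresholds before
/// performing the request, so they affect this frame rather than the next one.
func detectObjects(in pixelBuffer: CVPixelBuffer,
                   visionModel: VNCoreMLModel,
                   request: VNCoreMLRequest,
                   iouThreshold: Double,
                   confidenceThreshold: Double) throws {
    visionModel.featureProvider = try makeThresholdFeatures(iouThreshold: iouThreshold,
                                                            confidenceThreshold: confidenceThreshold)
    let handler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer, options: [:])
    try handler.perform([request])
}
```

The request keeps a reference to the same `VNCoreMLModel` object, so reassigning `featureProvider` on that object is enough; the request itself does not need to be recreated.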

Summary

Setting the IoU and confidence thresholds of the pipelined model at runtime can be accomplished with the MLFeatureProvider protocol if the detection model is used with a VNCoreMLRequest. To do so, we first add these thresholds as optional inputs with descriptive names in the model export step in coremltools. Then, these thresholds can be overridden by mapping their names to values in a class that conforms to MLFeatureProvider. We update these values, for example by reading them from a slider. During each call to the model, the latest values are read and applied to the model.